In the AI hardware market, NVIDIA is undeniably the dominant force, with its A100 and H100 accelerators being in high demand.

Intel and AMD are also actively investing in competing products. The former is focusing primarily on its GPU Max series, while the latter is concentrating on the Instinct MI series.

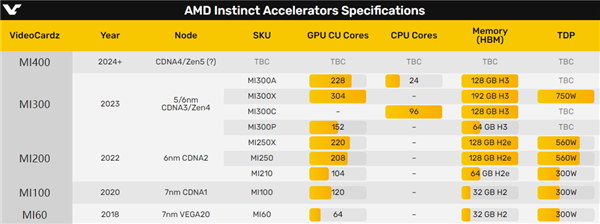

Not long ago, AMD officially launched the MI300 series accelerators. The MI300A integrates the Zen 4 CPU and CDNA 3 GPU architectures in a single package for the first time and features up to 128GB of HBM3 memory. The MI300X is a pure GPU solution equipped with 192GB of HBM3.

It’s said that there are also MI300C and MI300P versions, the former a pure CPU design and the latter a scaled-down MI300X at half the size.

Following the usual pattern, when one generation of products is released, the next one is already in active development. However, it’s rare for a CEO to confirm the name of the next generation.

AMD CEO Lisa Su recently stated that AMD continues to invest in AI, including the next-generation MI400 series accelerators and beyond.

Su also emphasized that AMD not only has a very competitive AI hardware roadmap but will also make some changes in the software.

She did not reveal more specific details, but it’s speculated that AMD may finally be planning a significant overhaul of its ROCm development framework, without which it will never be able to compete with NVIDIA’s CUDA.

It’s highly likely that the MI400 series will feature the new Zen 5 CPU and CDNA 4 GPU architectures, with both CPU+GPU fusion and pure GPU solutions.

Rumor has it that AMD is developing a new XSwitch high-speed interconnect bus technology to compete with NVIDIA’s NVLink, which is crucial for large-scale HPC and AI computations.

The global AI boom this year has made NVIDIA’s AI graphics cards highly sought after, and the surge in demand has led to prices spiraling out of control. For the past few months, there have been frequent reports of shortages and price hikes, with some of the most exaggerated claims suggesting that cards like the H100 have soared to 500,000 RMB, double their original price.

Another important reason for the premium on NVIDIA’s AI cards is the lack of viable alternatives on the market. Previously, NVIDIA had a near-monopoly, but thankfully, AMD’s MI300 series AI accelerator cards are set to hit the market in the second half of the year.

To compete with NVIDIA for market share, AMD has prepared thoroughly this time.

In a recent earnings call, CEO Lisa Su mentioned that AMD is increasing its AI-related spending and has formulated an AI strategy covering both AI hardware chips and software development.

Addressing concerns about MI300 chip capacity, Su stated that despite the tight supply chain, they have secured capacity from their supply chain partners, including TSMC’s CoWoS packaging and HBM memory, which are essential for AI chips.

AMD has stated that from chip manufacturing to packaging and components, they have ensured sufficient capacity for the MI300 series. They plan a major expansion in production from Q4 2023 to 2024 to ensure customer demand is met.